This term’s topic for the practical course “Sketching with Hardware” is “Sensory Extension”. It is linked to the ERC project AMPLIFY (https://amp.ubicomp.net/).

The overall task is to build a hardware sketch (a functional prototype that helps to communicate the idea) of a system that extends or amplifies human perception. The vision of AMPLIFY is described in [1] and [2]: using sensors and actuators to amplify, extend, or substitute human sensory impressions. Ideas range from additional sensor modalities to accessibility tools and bio-feedback devices.

Some ideas for inspiration:

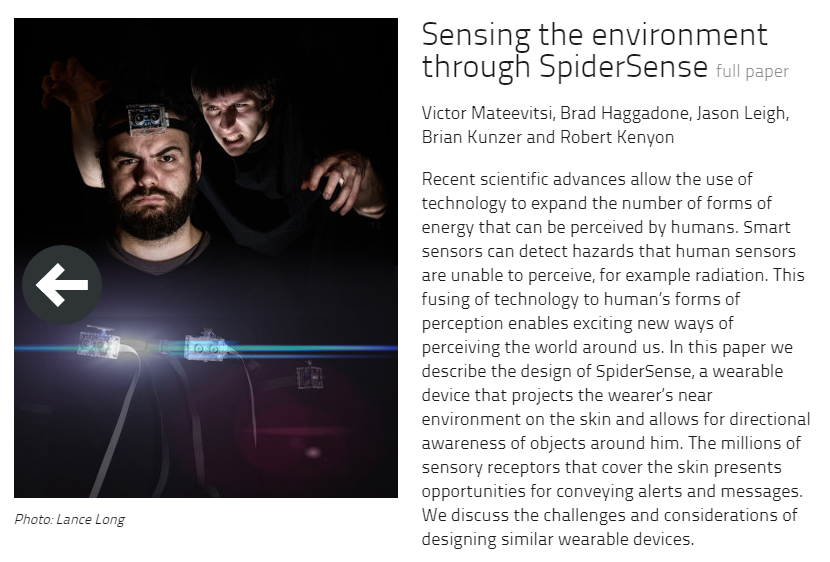

- SpiderSense, as demoed at the Augmented Human conference 2013 [3].

- Since we no longer walk barefoot, we have lost the perception of whether the ground is hot or cold, wet or dry, …

- Can we have glasses that signal to another person that they are too close for comfort?

- How can we use information about pulse/respiration/blood oxygen levels for wellbeing?

- Can we develop a sense for air quality? Do we feel dangerous situations?

As this is a course in hardware sketching, we want students to pick a topic they are excited about – our definition of sensory extension is broad!

Learning goals:

- build a system that combines hardware and electronics, software, and physical design

- learn about trade-offs in prototyping interactive electronics

- understand how to debug systems that include electronics and code (and potentially network connectivity)

- learn how to iterate when making physical designs

- learn how a hardware sketch can be used to communicate an idea

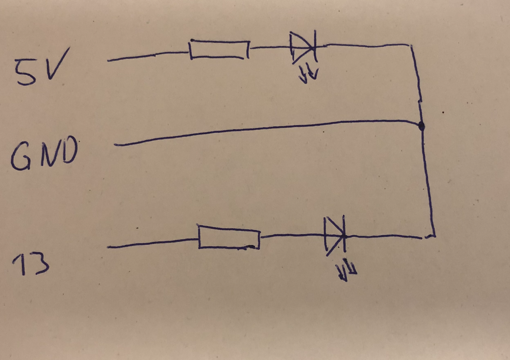

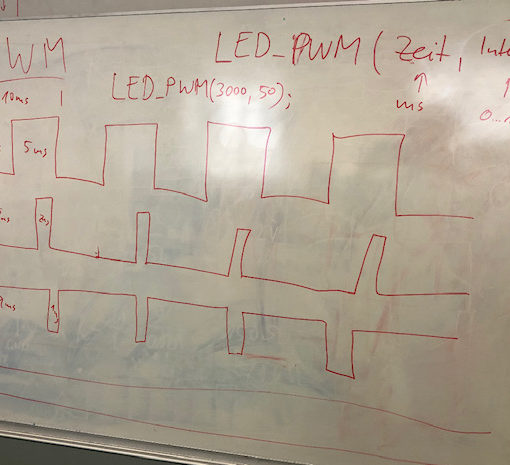

- learn to program Arduino

- learn basic electronics

We argue that functional prototypes are an important research tool – for more details, see [4].

References:

[1] Albrecht Schmidt. 2017. Technologies to Amplify the Mind. Computer 50, 10 (2017), 102–106.

[2] Albrecht Schmidt. 2017. Augmenting Human Intellect and Amplifying Perception and Cognition. IEEE Pervasive Computing 16, 1 (January 2017), 6–10.

[3] Victor Mateevitsi, Brad Haggadone, Jason Leigh, Brian Kunzer, and Robert V. Kenyon. 2013. Sensing the environment through SpiderSense. In Proceedings of the 4th Augmented Human International Conference (AH ’13). Association for Computing Machinery, New York, NY, USA, 51–57. DOI: https://doi.org/10.1145/2459236.2459246

[4] Albrecht Schmidt. 2017. Understanding and researching through making: a plea for functional prototypes. interactions 24, 3 (April 2017), 78–81. DOI: https://doi.org/10.1145/3058498